
Copyright © 2013, Paul Lutus

Most recent revision: 02.18.2013


"Reality must take precedence over public relations, for nature cannot be fooled." (Richard Feynman)

In 1992 Beth Rutherford, a cancer unit nurse from Missouri, sought psychological counseling to relieve job-related stress. But as things turned out, the therapy greatly increased her stress level: over time Beth's therapist persuaded her that she had been repeatedly raped and impregnated by her father while her mother held her down, and that she had been forced to self-abort using a coat hanger. When these claims were made public, they created a swath of destruction: the father, a clergyman, was forced to resign his position, the daughter's ability to function was shattered, and a family was destroyed.

At the time of these shocking revelations, a new method called Recovered Memory Therapy (RMT) had become popular among clinical psychologists, and Beth's therapist practiced RMT. According to those who practice RMT, we involuntarily suppress memories of traumatic events, and the more traumatic the events, the more we suppress them.

At the height of RMT's popularity, thousands of similar accusations surfaced worldwide — stories of multiple rapes, satanic rituals, bizarre behavior behind closed doors, all suppressed from conscious memory, and only recovered because of the new therapy. Sympathetic governments paid for treatments, then paid millions in damages to the victims of these terrible, repressed crimes.

Then the RMT edifice began to crumble. Some of the stories fell apart on their own, others were retracted by the "victims" who, over time, realized they had been talked into imaginary "memories" by their therapists. Beth Rutherford's story came apart the day she was tested and found to be a virgin, years after her sincere, heartfelt account of having been brutally raped and impregnated by her father, and long after permanent damage was done to a family.

The Recovered Memory Therapy fad, now called a "debacle" by some psychology insiders, did enormous harm to the field of psychology as well as to the public, but it didn't produce reform — instead, after a brief interlude, a new psychological fad has taken hold (autism spectrum overdiagnoses) that in retrospect may make RMT seem like a walk in the park (more on this topic below).

At this point my readers may ask how this is possible — how can professional psychological treatments cause so much deception, injustice and harm? Isn't psychology a science like medicine, governed by research and evidence?

In this article we'll explore these issues, discover what psychology can and cannot do, and consider remedies for the problems psychology has created for itself and is inflicting on all of us.

Because science lies at the heart of psychology's problems, let's start by examining the basics of science — how we decide whether something is, or is not, scientific.

First, science is not facts and discoveries; those are the fruits of science. Science is a discipline, a set of tools we can use to establish that something is likely to be true. We can never use science to prove an idea true, but through experiments and logic, we can sometimes prove an idea false. This view of science is captured by a saying commonly attributed to David Hume: "No amount of observations of white swans can allow the inference that all swans are white, but the observation of a single black swan is sufficient to refute that conclusion."

On first hearing this description of science, many people find it overly skeptical and limiting — is it true that science can only ever prove things false, not true? Yes, in exchange for the benefits of science, we need to understand and accept what science cannot do. We need to understand why scientists are naturally skeptical and assume an idea is false until there is supporting evidence.

Skepticism

To show why a skeptical outlook — the precept that an idea is assumed to be false until there is supporting evidence — is necessary for science, let's consider the opposite, that everything is assumed to be true unless proven false. If we adopt this position, then Bigfoot must be real, because no one has proven it false. But to categorically prove Bigfoot false would require proving a negative, which is an impossible evidentiary burden (read about Russell's Teapot to understand why). Therefore, to avoid accepting the truth of absolutely anything, the only rational posture toward untested ideas is to assume they are false unless there's evidence to support them. In science, this precept is called the "Null hypothesis."

My readers may wonder why it's important to define science so carefully — isn't this just a silly argument between philosophers drinking tea? Well, no, in fact a great deal is at stake. Many professions want the prestige and income that accompanies the status of science. Many commercial products can only win acceptance if they're backed up by science. And many people have beliefs they want labeled "science" for political and ideological reasons.

Science defined

In 1981 a group of Creationists (believers in the literal truth of the Bible) passed a law in Arkansas that required public schools to teach "Creation Science", the idea that human beings were created by a supreme being and did not descend from "lower" animals. The new law was quickly tested in court, and in a landmark ruling, Judge William Overton ruled against the Creationists by way of a concise definition of science. In McLean v. Arkansas Board of Education, Overton ruled that the essential characteristics of science are:

- It is guided by natural law;

- It has to be explanatory by reference to natural law;

- It is testable against the empirical world;

- Its conclusions are tentative, i.e. are not necessarily the final word; and

- It is falsifiable.

Many variations of this basic recipe have appeared in more recent legal rulings, but in both legal and practical terms, the above aptly distinguishes science from non-science — in short, a legitimate scientific idea must be testable against nature, can never ascend to the status of truth, and is potentially falsifiable in practical tests. I should add that, if an idea can't be tested in a practical way, then the fact that it might fail such a hypothetical test can't help its scientific standing. My reason for adding this caution will become obvious later on.

The "Kansas Test"

What do the phrases "explanatory by reference to natural law" (item 2 above) and "testable against the empirical world" (item 3 above) mean, and why are they important? Well, many theories agree with each other but not with nature — such theories aren't scientific on the ground that they can't be tested against reality. I call this the "Kansas test" — with reference to a now-famous line from The Wizard of Oz: "Toto, I've a feeling we're not in Kansas anymore" — if an idea cannot be tested against reality, then it's not meaningful science.

In a later legal ruling, Kitzmiller v. Dover (2005), the issue of empirical testability became pivotal to the outcome. Not to oversimplify a complex case, but in Kitzmiller v. Dover, a thinly disguised version of Creationism called "Intelligent Design" (ID) was being forced into science classrooms by religious fundamentalists. In a key exchange, ID proponent Michael Behe replied to a question about astrology this way:

“Under my definition, a scientific theory is a proposed explanation which focuses or points to physical, observable data and logical inferences. There are many things throughout the history of science which we now think to be incorrect which nonetheless ... would fit that definition. Yes, astrology is in fact one, and so is the ether theory of the propagation of light, and ... many other theories as well.”

The "Astrology Test"

The above reply, and a few others of the same kind, lost the case for ID's proponents, because they failed what I call the "astrology test" — simply put, if a scheme accepts astrology as science, then it's not a meaningful way to distinguish science from non-science.

To define a scientific field, we must incorporate and extend the definition of a scientific idea. Where a scientific idea needs to be testable and falsifiable, a scientific field must meet these additional requirements:

- A scientific field must be defined by theories that are testable and falsifiable.

- Research in the field must address the field's theories.

- If the field has practical applications, the applications must agree with the field's theories.

Let's examine these requirements:

Requirement 1: "A scientific field must be defined by theories that are testable and falsifiable." Here's an example that shows how a scientific field can emerge from a scientific idea:

- While studying moths, I notice that, over time, successive generations of moths that live in dark surroundings are more likely to be dark-colored, and moths that live in light surroundings are more likely to be light-colored (see Peppered moth evolution).

- By careful study I establish that individual moths don't change colors, but moths that are by chance born with a coloring that matches their surroundings are less likely to be seen and eaten by birds.

- At this point I have created a scientific idea. I have established that moths survive by having a coloring that matches their surroundings. And I might later discover that my idea no longer holds statistically over a larger population, or a different population — therefore it's falsifiable in principle.

- Then I use my idea to create a theory. My theory says that all living things — not just moths — survive by being born with random traits that make them more or less suited to their environments, and those creatures having favorable traits tend to have more offspring.

- While my idea could only describe, my theory is able to explain what was described, and it has the property of generality — it applies, not just to moths, but to all living things. And my theory retains the essential requirements for science — it can be tested against nature and potentially falsified.

- I can use my scientific theory to define a scientific field.

Requirement 2: "Research in the field must address the field's theories." Is this really necessary? Yes, it is. Here's why:

- Let's say I'm an astrologer and I'm angry that astrology is not regarded as a true science. It's true that astrology makes claims that aren't tested, or that fail the tests, but I don't think that's sufficient reason for people to say that astrology isn't a true science.

- So to remedy this injustice, I perform a scientific study — I perform a statistical survey to determine how many of each astrological sign live in the population. My study will have practical value to other astrologers, who now can order supplies with a better understanding of their customers. And the study is perfectly scientific — it uses well-established statistical methods and analysis.

- Does my population survey make astrology a science? No, it doesn't. Why not? Because, even though it is scientific, it doesn't test astrology's defining theories (for example, that the position of the planets rule our daily lives). It can never be more than a scientific study of an unscientific field.

- Therefore Requirement 2, "Research in the field must address the field's theories," must be part of the definition of a scientific field.

Requirement 3: "If the field has practical applications, the applications must agree with the field's theories." Why? Here's why:

- Let's say I'm a doctor and I have a theory that doesn't agree with the theories of my own field. I want to incorporate my theory into my practice, because I sincerely believe it will be beneficial to my patients. I am educated, sincere, and a trained doctor.

- My theory is that I can cure the common cold. My treatment is to shake a dried gourd over the patient until he gets better. My treatment always works — I shake the gourd, the patient gets better. The treatment sometimes requires a week, but it always works. The correlation between my treatment and the patient's condition is 100% reliable.

- Critics complain that I don't have a control group, whatever that is, but those people are just jealous of my success in curing the common cold, for which I deserve a Nobel Prize.

- Some constipated control freaks at the local medical board are planning to pull my license, but they very clearly don't recognize my genius. These critics apparently have forgotten that they laughed at Einstein (relativity), they laughed at Wegener (continental drift, the forerunner of plate tectonics), and they laughed at Bozo the Clown ... umm, never mind that last one.

- This example shows why a field that doesn't — or can't — control the behavior of its practitioners hasn't earned the public's trust and doesn't deserve to be called a science.
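The complaint about the missing control group can be illustrated with a small simulation. The Python sketch below is a toy model, not medical data: it assumes (hypothetically) that every cold ends by itself within 3 to 10 days, and the function name `recovered_within` and the group sizes are invented for the illustration.

```python
import random

def recovered_within(days: int, n: int, rng: random.Random) -> float:
    """Fraction of n patients whose cold has ended after `days` days.

    Assumption (hypothetical): every cold ends by itself after 3 to 10 days.
    The treatment plays no part in this model at all.
    """
    durations = [rng.randint(3, 10) for _ in range(n)]
    return sum(d <= days for d in durations) / n

rng = random.Random(1)
gourd_group = recovered_within(days=10, n=500, rng=rng)    # gourd shaken daily
control_group = recovered_within(days=10, n=500, rng=rng)  # no gourd at all
print(gourd_group, control_group)
```

Under these assumptions both groups show complete recovery by day 10, so a perfect record for the gourd says nothing about whether the gourd does anything. Only the comparison with the untreated group reveals that.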

The deeper meaning of the three requirements above is that they produce unification and correspondence between related scientific fields and their theoretical foundation. A classic and oft-quoted example of theoretical unification is physics, which has a small handful of very powerful, well-tested theories that both unify and define a large number of subfields, as well as disciplines associated with physics that aren't themselves sciences, for example civil and electrical engineering and aeronautics.

Theoretical unification

The unifying effect of physical theory is shown by the partnership between cosmology and particle physics, fields that at first glance seem unrelated. Cosmology studies events and processes at a very large scale — billions of light-years of size, billions of years of time, vast amounts of mass and energy. Particle physics studies events at a scale that can only be described as nanoscopic, unimaginably small. And yet, because of the power of current physical theories, cosmologists need to know what particle physicists are working on, and particle physicists need to know what cosmologists are working on. Scientists in these seemingly unrelated fields attend each other's conferences and read each other's journals.

For example, when particle physicists discovered that neutrinos have mass, this had immediate and profound effects on cosmology — our understanding of how stars evolve had to be revised. And when cosmologists discovered dark matter by observing the motions of galaxies, this triggered an immediate search for dark matter candidates by particle physicists, a search that continues today.

Applied science

Turning to the issue of applications (requirement 3 above), civil engineering is an example of applied physics. Civil engineers aren't scientists, but to be safe and effective they must pay attention to the scientific theories of the parent field. When a civil engineer builds a bridge, she either pays attention to physical theories, or she risks public safety and might be prosecuted. When an aeronautical engineer designs an airliner, he must pay attention to the same physical theories that bind his field to civil engineering, to cosmology and to particle physics.

It is an established historical trend in science that theories make more and more predictions based on fewer and fewer first principles. The most successful scientific theories predict a large percentage of everyday reality with only a handful of principles and corollaries. The least successful scientific theories require many assumptions to make only a few predictions, and sometimes correspond poorly, or not at all, with other scientific fields.

Apart from physics, already described, other examples of theoretically unified scientific fields include:

- Chemistry, which uses a handful of theories to explain much of everyday life, both organic and inorganic, from a chemical perspective. Chemistry is also very closely associated with physics, indeed it is said that modern chemistry is an outgrowth of quantum theories. Chemistry is sometimes called the "central science" because it links physics, biology and geology.

- Biology, which has two key unifying theories — evolution, which explains events on a large scale, and cell biology, which explains events on a small scale. These two theories work together in genetics, which explains things on both large and small scales.

- Geology, which has a number of interrelated theories and, like biology, operates on large and small scales. Plate Tectonics, a sub-theory of geology, offers an explanation of the changing shapes of land masses, earthquakes and some properties of volcanism.

- Medicine, which concerns itself with human health and functioning, relies on biology and chemistry for its theoretical underpinnings. Neuroscience, a sub-theory of medicine, studies the brain and nervous system, and may eventually replace psychology as the preferred approach to analyzing and treating what are now called "mental" disorders.

All these disciplines depend on research to test existing theories and create new ones. And research, in turn, depends on objectivity — a word with two meanings relevant to the present context. Research must be:

- objective in the sense that we must dispassionately examine evidence without personal bias.

- objective in the sense that an observation will lead two or more similarly equipped observers to the same conclusion.

Figure 1: Which blue line is longer?

It is said that science is not so much knowing as knowing that we know. Simply observing nature and shaping theories is not science; there's more to it than that. The problem with simple observation is that people almost always have a preconceived notion of what "ought" to be so, or they draw premature conclusions, or their senses fool them. Richard Feynman, a philosopher of science as well as a world-class scientist, said, "The first principle is that you must not fool yourself, and you are the easiest person to fool."

To see whether you're immune to perceptual distortions, examine Figure 1. Which blue line is longer?

Objectivity

Science has many methods to guard against our human tendency to distort what nature tries to tell us, but the first line of defense is a scientist's willingness to set aside personal biases and preferences, and to sincerely ask whether an experimental outcome really means what it seems.

In the early days of science, a typical scientist was a wealthy individual who had no incentive to cheat and who only wanted to find out how nature worked. But in modern times, science's landscape has changed completely — those who achieve scientific breakthroughs may become rich and famous, so there's a strong incentive to exaggerate scientific results or simply invent results for personal gain.

But even absent the distorting effects of possible fame and fortune, many things can go wrong in research. Scientists might mistakenly think a correlation between events A and B means that A caused B, when in fact B might have caused A, or both A and B might be the result of some unevaluated cause C. This problem is summarized in the saying "correlation is not causation."

Errors and Corrections

Fortunately, mainstream science is largely self-correcting. Cheaters can't prevail for long because other scientists will try to duplicate and hopefully confirm published results (such an experimental confirmation is called a "replication"). This self-correcting possibility is present in fields that aggressively repeat the studies of others, e.g. physics, chemistry, biology, medicine and a few others. Some fields rarely try to duplicate the work of others, and this represents a danger that people may cheat: they may publish results that either cannot be duplicated for practical reasons, or that are not what they seem.

Here are examples that show how mainstream science deals with cheaters:

- In the now-famous Schön scandal, a researcher at prestigious Bell Labs reported a series of remarkable semiconductor breakthroughs. But because his work could not be replicated, he was eventually exposed, disgraced and fired.

- South Korean scientist Hwang Woo-suk was regarded as a leading researcher in the field of stem cell research, but his more recent work was found to be fabricated, and Hwang was charged with fraud and embezzlement.

- In a case reported under the headline "Leaders of Faster-Than-Light Experiment Step Down", a hasty, poorly analyzed claim about superluminal neutrino speed attracted worldwide attention, but eventually resulted in the resignation of the experiment's leaders.

The first two examples are of researchers who simply tried to game the system and were caught, but the third is more interesting. It attracted much attention among physicists by claiming that a bedrock physical principle (that nothing travels faster than light) might not hold in all cases. But the outcome (resignations in disgrace) resulted from the originators' willingness to promote a dubious preliminary result as though it represented solid experimental evidence.

Lex parsimoniae

In mainstream science, researchers are expected to examine their experimental setups and assumptions very carefully before publicizing an "anomaly" like the neutrino result above — does the anomaly actually represent what we think? Is there a pedestrian explanation (like a loose wire) that's more likely than the exotic one? Medical students have a saying about this: "When you hear hoofbeats, think horse, not zebra" — meaning don't jump to an improbable conclusion. There is also a scientific precept called "Occam's razor" or lex parsimoniae (the law of parsimony) that makes the same point — in many cases, the simplest explanation turns out to be the right one. And in all cases, the simplest explanation consistent with the evidence is to be preferred.

P-value

Science isn't meant to be adversarial like law and (at least in principle) it isn't about self-promotion. In normal research, workers are expected to keep an open mind and consider alternatives to the effect they're studying. Consistent with this open philosophy, research papers include a "p-value", the probability that an outcome at least as extreme as the one observed would arise by chance alone if the effect being studied did not exist.

For example, if I flip a fair coin eight times and get eight heads, that's unlikely but not astronomically so: it has a p-value of 1/256, meaning a fair coin would produce this outcome by chance with a probability of about 0.0039. If I published my coin-flip experiment, I would include the phrase "p <= 0.0039" to show that I had performed a statistical analysis. Here 0.0039 is the probability of getting a result this extreme if the null hypothesis (that there is no effect apart from chance) is true. It is not the probability that the null hypothesis itself is true.
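The coin-flip arithmetic can be checked with a few lines of Python. This is a minimal sketch: the helper name `binomial_tail` is an assumption made for this illustration, and it computes a one-tailed probability (this many heads or more) for a coin that is assumed fair.

```python
from math import comb

def binomial_tail(n: int, k: int, p: float = 0.5) -> float:
    """Probability of k or more heads in n flips of a coin with P(heads) = p."""
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k, n + 1))

# Eight heads in eight flips of a fair coin.
p_value = binomial_tail(8, 8)
print(p_value)  # 0.00390625, which is 1/256
```

The same function shows how quickly the evidence weakens: `binomial_tail(8, 7)` gives the chance of seven or more heads, which is 9/256, about nine times larger.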

I apologize to my readers if this seems a bit technical, but the p-value issue is critical to understanding the difference between mainstream science and psychological science. Scientists are expected to bend over backward to consider all possibilities and avoid drawing conclusions not supported by the evidence. And in mainstream science, a finding that's regarded as significant must be accompanied by a relatively small p-value. For example, at the time of writing physicists are searching for evidence of the Higgs boson, a missing part of the Standard Model, a blueprint of reality crafted by physics over decades. Because of the importance of the Higgs, an experiment that claimed to have discovered it must reach a significance of at least 5σ ("five sigma"), meaning a p-value below about 2.87 x 10^-7, or roughly one chance in 3.5 million that the result came about by chance. The term "sigma" (σ) refers to a statistical quantity called "standard deviation".

Here is a table and graph showing levels of significance and associated p-values:

| Sigma (σ) | p-value (one-tailed) |
|-----------|----------------------|
| 0 | 0.5000 |
| 1 | 0.1587 |
| 2 | 0.0228 |
| 3 | 0.0013 |
| 4 | 3.1671 x 10^-5 |
| 5 | 2.8665 x 10^-7 |
| 6 | 9.8659 x 10^-10 |
| 7 | 1.2798 x 10^-12 |

Table 1: Sigma (σ) numbers and associated p-values
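The sigma-to-p-value conversion can be reproduced with the Python standard library. This sketch assumes the quantity being measured follows a normal distribution and that the test is one-tailed. The function name `one_tailed_p` is invented for this illustration.

```python
from math import erfc, sqrt

def one_tailed_p(sigma: float) -> float:
    """Area under the standard normal curve more than `sigma`
    standard deviations above the mean."""
    return 0.5 * erfc(sigma / sqrt(2))

for s in range(8):
    print(f"{s} sigma -> p = {one_tailed_p(s):.4e}")
```

The complementary error function `erfc` is used rather than `1 - erf(...)` because the direct subtraction loses precision for the very small tail areas at 6σ and beyond.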

Figure 2: Deriving a p-value

This summarizes the practice of mainstream science: a focus on evidence, a deep awareness of what can go wrong, factors that might lead us to draw the wrong conclusion or overestimate the meaning of a result. In publishing a result, a scientist sincerely tries to include all the factors that might undermine a given result or render it meaningless, rather than wait for a critic to do it for him.

But not all sciences work to this high standard. There is a science spectrum, ranging from physics at one extreme, where standards are very high and a seemingly trivial error can end one's career, to psychology at the other extreme, where no one seems to care how low the standards become. Picturing that spectrum is our next topic.

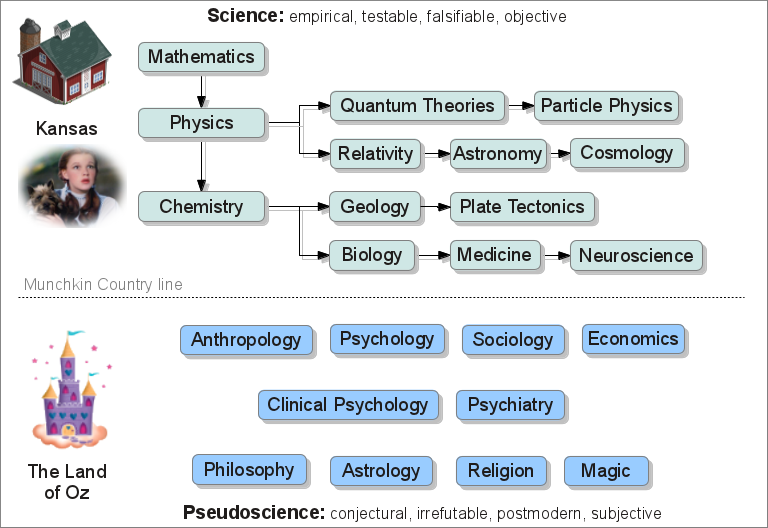

Before starting a commentary on, and analysis of, psychological science, let's pause for a look at the landscape of science, pictured as a spectrum of disciplines lying between Kansas (reality) and the Land of Oz (fantasy). One extreme, Kansas, represents idea validation by direct observation of nature, and the other extreme, the Land of Oz, represents a perfect indifference to reality-testing. In this portrayal, all scientific and pseudoscientific theories and disciplines lie somewhere on the spectrum between Kansas and the Land of Oz.

I chose Kansas and the Land of Oz to represent reality and fantasy respectively, because the highest quality science, that based on objective, dispassionate observations of nature, is often the least interesting to nonscientists, while the poorest quality science, including ideas having no scientific basis at all, tends to fill the majority of air time on the Discovery and "Science" TV channels: wormholes, multiple universes, UFOs, Bigfoot sightings, Loch Ness monsters, and ghost hunters. I think while watching what passes for science on American television, Dorothy would want to say, "Toto, I've a feeling we're not in Kansas anymore."

Figure 3: A simplified science spectrum

A few comments on Figure 3: At the top of the image are mainstream sciences, sciences connected to each other in such a way that, if a new discovery is made in any of them, it's likely to have repercussions in some or all of the others. The arrows are meant to show the primary direction of influence between fields (not to exclude other less significant vectors): mathematics to physics, physics to chemistry, and so forth.

The graphic is not meant to be comprehensive or all-encompassing, only to be suggestive and show an outline of some sciences, in particular those this article analyzes. And to avoid making the graphic too complex, important reverse vectors are omitted, for example between physics and mathematics, and between cosmology and particle physics.

Readers may wonder why there are no connections between the sciences below the Munchkin Country line (separating Kansas from the Land of Oz) as there are above the line — for example, why is there no connection between psychology and clinical psychology? At risk of expressing a tautology, the reason there is no connection shown between psychology and clinical psychology, is simply because there is no connection between psychology and clinical psychology in reality. Clinical psychologists regularly claim scientific status for their activities on the ground that psychology is scientific, but many experimental psychologists go out of their way to disown clinical psychology at every opportunity. And it's uncontroversial that experimental psychology has precisely no effect on, and exerts no control over, either clinical psychology or psychiatry.

As to the other sciences in the lower half of the graphic, they're not connected in any meaningful way, certainly not in the way that mathematics, physics and chemistry are connected. Indeed, while many "Land of Oz" fields claim scientific status for themselves (as both clinical psychology and psychiatry do), with equal vigor they deny the scientific standing of the other.

The true meaning of the Munchkin Country line can be simply stated: when reality-testing proves a theory false, those above the line abandon the theory, while those below it abandon reality.

I ask that my readers suspend judgment of my seemingly harsh treatment of those "sciences" below the Munchkin Country line until they've read the following sections.

The study of the mind

With respect to science, human psychology faces an immense obstacle posed by its focus on the mind. Human psychology is defined as "the study of the mind, occurring partly via the study of behavior", but the mind is not a physical organ, it's an abstract concept, and measurements of the mind's state are indirect and subjective (by way of a subject's verbal reports, for example). This makes psychology, as defined, a branch of metaphysics, not physics.

Remember that, in the earlier definition of science, it was established that legitimate science must be empirical, based on observations of nature. One advantage of empirical science is that two or more observers should draw the same conclusion from given observations (objectivity), and by the same reasoning, a falsification should be accepted by all. But the mind is not a thing, it's an idea — it's not part of the empirical world, and is therefore not amenable to empirical study.

Psychologists argue that this isn't a practical limitation to science, because to circumvent it they can attach EEG electrodes to a subject or place a subject in a high-tech brain activity scanner. This is an obvious way to improve the quality of evidence, but it abandons psychology (study of the mind) in favor of neuroscience (study of the brain), as well as increasing the already wide gap between theoretical psychology and clinical psychology.

Notwithstanding a conspicuous and gradual swing away from psychology toward neuroscience, the majority of published psychological research remains study of the mind and behavior, which partly explains the poor connection between psychological science and that other kind of science ... the real kind.

Pitfalls in Human Studies

Earlier I listed some experimental procedures meant to support objective outcomes in cases where bias or interpretation errors might influence an experiment. But in studies with human subjects, much stricter safeguards must be present to avoid errors. For example, if researchers or subjects know what is being studied and what the expectations are, this often undermines the study and renders the results worthless. Because of this, there are far fewer worthwhile human studies than studies with animals or test-tube experiments. The best kind of human study — the "gold standard" for such studies — has these traits:

- Experimental and control groups should be drawn from a uniform population in advance of the study (a "prospective study" design).

- The experimental and control groups should both be large and as much alike as practical.

- Neither the experimenters nor the subjects should know to which group a given subject belongs (the "double-blind" criterion).

- The researchers should state in advance what they are studying, and resist the temptation to change what's being studied based on preliminary results.

- The researchers should commit themselves in advance to publish regardless of the study's outcome (this prevents the temptation to discard experiments that fail to meet the experimenter's expectations).
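The group-assignment and double-blind criteria above can be sketched in code. The following Python example is only an illustration under stated assumptions: the function name `assign_blinded`, the opaque codes "A" and "B", and the use of simple random assignment into two equal groups are all choices made for this sketch, not a description of any particular study protocol.

```python
import random

def assign_blinded(subject_ids, seed=None):
    """Randomly split subjects into two equal-sized groups with opaque codes.

    Returns (blinded, key). `blinded` maps each subject to a meaningless
    code that experimenters and subjects may see. `key` maps each code to
    the real group and stays sealed with a third party until the study ends.
    """
    rng = random.Random(seed)
    shuffled = list(subject_ids)
    rng.shuffle(shuffled)                # random order removes selection bias
    half = len(shuffled) // 2
    codes = ["A", "B"]
    rng.shuffle(codes)                   # so "A" does not always mean treatment
    key = {codes[0]: "treatment", codes[1]: "control"}
    blinded = {s: codes[0] for s in shuffled[:half]}
    blinded.update({s: codes[1] for s in shuffled[half:]})
    return blinded, key

blinded, key = assign_blinded(range(1, 21), seed=42)
print(blinded)  # what the experimenters see: subject -> "A" or "B"
```

Because the assignment is made before any data are collected and nobody handling the subjects holds `key`, neither expectations nor prior choices of the subjects can steer who ends up in which group.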

But in typical psychological research, all these standards are regularly ignored, and many kinds of questionable results are published as though they represent useful science. One regularly sees studies that use a retrospective design (one in which the study group is selected from a population based on prior behaviors and choices), with no double-blind safeguards and no control group.

Intelligent vitamins

Here's a hypothetical example that shows why retrospective studies are so unreliable:

- A study is planned to test for a connection between taking a daily vitamin and improved intelligence.

- The study's designers realize it would be unethical to design a prospective study with a control group, because the control group would necessarily receive a placebo instead of a vitamin, and they might suffer adverse health effects. Also, a prospective study is much more expensive than a retrospective study. So the decision is made to design a retrospective study instead, a study that examines the behavior of existing members of the population.

- The retrospective study design involves interviewing members of the general population, measuring intelligence and asking if the subjects take a daily vitamin.

- The study concludes that, indeed, there is a positive correlation between taking a vitamin and higher intelligence.

- Therefore, say the study's authors, people who take a vitamin become more intelligent.

My readers should be laughing at the naïveté of this imaginary study's design and conclusions, but if this isn't the reaction, let me explain: the above is a classic confusion of correlation with causation. Maybe the study's result means intelligent people are smart enough to take a daily vitamin because of their better grasp of health issues. The point is that the study cannot distinguish between that cause, the cause the study claims to be studying, and some third cause that produces the same outcome.
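The vitamin example can be made concrete with a small simulation. This is a toy sketch under invented assumptions: vitamins have no effect on intelligence at all, and brighter people are simply more likely to take one. The function name `simulate_vitamin_survey` and every number in it are hypothetical.

```python
import random
import statistics

def simulate_vitamin_survey(n=10_000, seed=1):
    """Simulate a retrospective survey in which vitamins have NO effect on IQ.

    Assumption for illustration: IQ influences the vitamin habit, never the
    other way round.
    """
    rng = random.Random(seed)
    takers, non_takers = [], []
    for _ in range(n):
        iq = rng.gauss(100, 15)
        # Probability of taking a vitamin rises with IQ, clamped to [0.05, 0.95].
        p_vitamin = min(0.95, max(0.05, 0.5 + (iq - 100) / 100))
        (takers if rng.random() < p_vitamin else non_takers).append(iq)
    return statistics.mean(takers), statistics.mean(non_takers)

mean_takers, mean_non_takers = simulate_vitamin_survey()
print(f"vitamin takers: {mean_takers:.1f}, non-takers: {mean_non_takers:.1f}")
```

The survey finds that vitamin takers score higher on average, even though the vitamin does nothing in this model. A retrospective design has no way to tell this situation apart from one in which the vitamin really works.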

How not to do science

I warn my readers that studies like the above are by no means uncommon in psychology. Here's a recent published study that comes to a similarly brainless conclusion:

The study, titled Six Developmental Trajectories Characterize Children With Autism, tracks the histories of autism sufferers and draws conclusions about the beneficial role of therapy, but with no evidentiary basis and no control group. The author's views are expressed in a news account with the headline "10 percent of autistic kids 'bloom' with therapy: study". A quote from the article: "Many autistic children with social and communication problems benefit from intensive therapy and about 10 percent "bloom," enjoying rapid improvement in social skills as they grow older, U.S. researchers said on Monday ... Fountain [the study's author] said the findings suggest that providing equal access to the best autism treatment for minority and less well-off kids will be important going forward." (emphasis added)

But the study does not support these claims in any way. The above represents the opinions of the study's author, not the outcome of scientific research. The news story even acknowledges that "The researchers did not have information about the specific treatment each child received." And the original article says, "Although we are unable to identify the specific mechanisms through which socioeconomic status affects trajectory outcomes, the intervening variables likely include home and neighborhood environments, quality and intensity of treatment, quality of education, the efficacy with which parents are able to advocate for their children with institutions providing services, and many other factors in various permutations." (emphasis added)

Hmm — does the key phrase "likely include" sound like a scientific basis on which to draw a conclusion? This retrospective study has no control group, a group whose history might be compared to an experimental group, no blinding precautions to prevent bias on the part of the investigators, and has no basis to support the conclusions the author is quoted as claiming for the study, conclusions that are contradicted by the author's own words in the body of the article.

In the author's tendentious analysis, 10% of the tracked subjects are said to greatly improve over time and the improvement is credited to intensive therapy. But other subjects remained the same over time, and still more may have declined in condition during the study. Did these others also receive "intensive therapy"? The study can't tell us, and the author doesn't seem prepared to consider the possibility that all the subjects received equally intensive therapy, or that there's no connection between therapy and outcome (the null hypothesis). And the absence of a control group, a group receiving no therapy for comparison, is fatal to the study's scientific standing for the reason that autistic children are known to improve over time without intervention: Autism Improves in Adulthood: "Most teens and adults with autism have less severe symptoms and behaviors as they get older, a groundbreaking study shows."

In short, a study that cannot draw any conclusions about treatment is being used as a soapbox for clinical psychology treatment advocacy by someone who apparently hopes no one will read or understand the original work. This would be shameful and grounds for dismissal in mainstream science, but in psychological science it is routine.

The above is not meant to claim therapy has no effect on autism, only that the claim isn't based on science. And how could it be? No one knows what causes autism, how to reliably diagnose it, or how to treat it. In mainstream medicine, there would be no treatment until a cause was uncovered, an unambiguous set of diagnostic indicators was established, and benefits from therapeutic procedures had been proven in clinical trials. None of this is true for autism, and yet diagnoses are being made, and treatments being offered, without any scientific basis. The reason? It's not science, it's psychological science.

I chose the above study as typical of current professional literature in psychological science, but (as shown below) it's not in any way an extreme case of drawing conclusions with no evidentiary support.

P-values in psychology

Earlier I explained the meaning of a p-value (roughly, the probability that a result at least as extreme as the one observed would arise by chance alone if the tested effect didn't exist), widely used as a measure of an experiment's reliability. I also showed that, in physics, a p-value must be very small (i.e. 5 σ or about one in four million) to allow the inference that a finding may count as a discovery.

In psychology, much poorer statistical indicators are accepted as significant: p-values in the range 0.01 to 0.05 (meaning a result at least that extreme might arise by chance with a probability of 1% or 5% respectively) are commonly regarded as suggesting statistical relevance. But p-value analysis has been widely criticized on multiple grounds. One study, Statistical Evidence in Experimental Psychology, comes to the conclusion that "for 70% of the data sets for which the p value falls between 0.01 and 0.05, the Bayes factor indicates that the evidence is only anecdotal" (emphasis added). In this context, "only anecdotal" suggests that such a result carries little evidential weight or scientific value.
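To give a sense of the gap between the two standards, the tail probability for 5 σ can be computed directly and compared with 0.05. This is a minimal sketch that assumes a normal distribution and the one-tailed convention; the helper name `one_tailed_p` is made up for the example.

```python
import math

def one_tailed_p(sigma):
    """Upper-tail probability of a standard normal beyond `sigma` deviations."""
    return 0.5 * math.erfc(sigma / math.sqrt(2))

p_physics = one_tailed_p(5.0)   # the 5-sigma discovery threshold
p_psych = 0.05                  # a commonly accepted threshold in psychology
ratio = p_psych / p_physics     # how much looser the 0.05 standard is

print(f"5 sigma: p = {p_physics:.2e} (about 1 in {1 / p_physics:,.0f})")
print(f"p = 0.05 is about {ratio:,.0f} times less strict")
```

Under these assumptions the 5 σ threshold works out to roughly one chance in 3.5 million, which makes a 0.05 threshold more than a hundred thousand times more permissive.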

Sloppy and fraudulent research

Much of psychological science consists of studies of topics so nebulous that any statistical analysis is pro forma and cannot lend weight to the study's conclusions. Moreover, psychological research is rarely replicated or even examined in any depth by other workers, which opens the door to the possibility of outright fraud. Here are some details of a recent case of egregious psychological fraud:

- Diederik Stapel of Tilburg University has recently been exposed as a fraud and has openly acknowledged this. Stapel is quoted as saying, "I have failed as a scientist and researcher."

- Quoting from a coordinated investigation by three involved universities, "The Committee has arrived at the conclusion that the extent of Mr Stapel’s fraud is very substantial. The Committee has already encountered several dozen publications in which use was made of fictitious data."

- Because Stapel had overseen the activities of "more than a dozen" doctoral candidates, these programs and (in some cases) doctoral degrees will have to be reviewed for evidence of fraud.

- The initial committee investigating Stapel originally wanted to strip him of his doctoral degree, but "... the Amsterdam committee has been unable demonstrably to establish any fraud with research data, partly because the data has been destroyed."

- How could Stapel's fraud go unrecognized for so long? That's easy to answer — just look at the topics he studied: "Dr. Stapel has published about 150 papers, many of which [...] seem devised to make a splash in the media. The study published in Science this year claimed that white people became more likely to 'stereotype and discriminate' against black people when they were in a messy environment, versus an organized one. Another study, published in 2009, claimed that people judged job applicants as more competent if they had a male voice." How does one meaningfully replicate or falsify such claims?

- In its final report, the three university panels that investigated the Stapel affair cited far-reaching problems with the field of psychology itself, describing sloppy research practices, scientists who simply don't understand statistical methods, journal editors who refuse to publish negative outcomes, and a willingness to print results that are obviously too good to be true.

- But the Stapel case is only an extreme example of common practice. A quote from the New York Times article: "In a survey of more than 2,000 American psychologists scheduled to be published this year, Leslie John of Harvard Business School and two colleagues found that 70 percent had acknowledged, anonymously, to cutting some corners in reporting data. About a third said they had reported an unexpected finding as predicted from the start, and about 1 percent admitted to falsifying data." (italics added)

- The remark above about reporting "an unexpected finding as predicted from the start" refers to a widespread psychological practice in which a study is begun on one topic, but something unexpected or more interesting appears, so the study is redefined in mid-stream to study that instead. The general term for this is "data mining," which in psychology means throwing an opportunistic research net over murky waters to see what's dragged in.

- In violation of ethical guidelines, many psychology researchers refuse to publish their data along with their results. Quoting from a study of data sharing practices: "We related the reluctance to share research data for reanalysis to 1148 statistically significant results reported in 49 papers published in two major psychology journals. We found the reluctance to share data to be associated with weaker evidence (against the null hypothesis of no effect) and a higher prevalence of apparent errors in the reporting of statistical results." (italics added)
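The danger of redefining a study in mid-stream, described in the list above, can be put in numbers. The sketch below assumes a study that quietly examines 20 independent outcomes, none of which reflects a real effect, and asks how often at least one of them passes the p < 0.05 test. The count of 20 and the function names are assumptions chosen for illustration.

```python
import random

def chance_of_false_hit(n_tests, alpha=0.05):
    """Probability that at least one of n independent null tests passes alpha."""
    return 1 - (1 - alpha) ** n_tests

def simulate_false_hits(n_tests=20, alpha=0.05, trials=20_000, seed=2):
    """Fraction of simulated studies with at least one spurious 'finding'.

    Under the null hypothesis a p-value is uniform on [0, 1], so each test
    is modeled as one uniform random draw.
    """
    rng = random.Random(seed)
    hits = sum(
        any(rng.random() < alpha for _ in range(n_tests))
        for _ in range(trials)
    )
    return hits / trials

analytic = chance_of_false_hit(20)
simulated = simulate_false_hits()
print(f"analytic: {analytic:.3f}, simulated: {simulated:.3f}")
```

With 20 looks at pure noise, the odds of finding something "significant" are better than even. Stating the hypothesis in advance is what removes this advantage.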

I emphasize that the above shocking revelations are with respect to the research arm of human psychology, the arm that sees itself as scientific — in contrast to clinical psychology and psychiatry, which are generally seen as much less scientific and evidence-based.

To conclude this section, when we compare psychological science to how science is defined — empirical, based on observations of nature, objective, tentative, falsifiable — we're forced to the conclusion that psychological science is not science. I ask my readers to remember the following when they hear the phrase "psychological science":

Psychological science is to science what military music is to music.

Many psychological treatments have come and gone over decades — therapy based on Freudian theories, now discredited by all but a small band of loyal followers; psychiatry, falling out of fashion in favor of more short-term, results-oriented methods; and some more recent practices that are the topic of this section. But I ask my readers to notice that none of psychology's therapeutic methods have survived the test of time.

Cognitive-Behavioral Therapy

Cognitive-behavioral therapy (CBT) has a wide following, and there are many apparently bulletproof studies that seem to show its efficacy. But there are an equal number of studies that seem to validate other kinds of therapy, and a recent meta-analysis reveals that all recognized therapies produce similar results, to the degree that there is no basis for preferring any particular therapy.

A Consumer Reports study summarized in this abstract concludes "... no specific modality of psychotherapy did better than any other for any disorder; psychologists, psychiatrists, and social workers did not differ in their effectiveness as treaters; and all did better than marriage counselors and long-term family doctoring." The summarizing article's author comes to this conclusion: "the Consumer Reports survey complements the efficacy method, and that the best features of these two methods can be combined into a more ideal method that will best provide empirical validation of psychotherapy."

But the above abstract and its conclusion fail to take into account the Placebo Effect, the well-established, genuinely therapeutic effect of ineffective treatments if presented in a way that a subject believes they represent meaningful treatment, and the Hawthorne Effect, in which subjects improve no matter what treatment is being offered, solely because a treatment is offered and the subject is getting attention. These disregarded factors, plus the absence of any meaningful experimental controls or control group, suggest that there is no scientific basis for the oft-heard claim that psychotherapy works. Again, as before, this is not to suggest that therapy doesn't work, only that there's no scientific basis for the claim.
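A toy simulation shows why an uncontrolled before-and-after comparison cannot separate a therapy's effect from placebo and attention effects. The model is deliberately extreme and entirely hypothetical: therapy adds nothing, and every subject improves by about 5 points on some wellness score simply from being attended to.

```python
import random
import statistics

def simulate_trial(n=500, seed=3):
    """Average improvement for treated and untreated groups (higher = better).

    Assumption for illustration: the therapy itself contributes nothing;
    all subjects gain about 5 points from attention and expectation alone.
    """
    rng = random.Random(seed)
    treated = [rng.gauss(5, 10) for _ in range(n)]
    control = [rng.gauss(5, 10) for _ in range(n)]
    return statistics.mean(treated), statistics.mean(control)

treated_gain, control_gain = simulate_trial()
print(f"treated gain: {treated_gain:.1f}")   # viewed alone, looks like success
print(f"control gain: {control_gain:.1f}")   # the comparison that is missing
print(f"difference:   {treated_gain - control_gain:.1f}")
```

A survey that reports only the first number would conclude that the therapy works. Only the difference between the groups speaks to the therapy itself, and that number requires a control group.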

A scientific approach to validating psychological therapy would need to have rigid scientific controls, meaning a double-blind, prospective experimental design, a control group, and a willingness to publish the results no matter what they revealed. But this suggestion is hopelessly unrealistic: there are ethical issues involved in offering placebo therapies to unsuspecting subjects, and the double-blind criterion appears to present an insurmountable obstacle, because, for the control group, both therapists and subjects would need to be unaware that the control therapy was a sham.

What this means is that, even if psychological science were to improve greatly, these systematic problems would represent an impenetrable glass ceiling (that I labeled the "Munchkin Country line" in Figure 3) that, stated bluntly, would prevent a rigorous science of the mind.

Big Pharma

Because of a huge opportunity for profit, major drug companies do what they can to exploit the softness and flexibility of psychological science to create the impression that psychoactive drugs are more effective than they actually are. For example, many published studies validate the effectiveness of antidepressant drugs.

But aware of the distorting effect of selective publication, researchers performed a meta-analysis that combined the results of published studies with other studies that drug companies completed but then decided not to publish. When the unpublished studies are included, the effectiveness of antidepressant drugs evaporates. The researchers avoid claiming drug companies intentionally dumped studies that didn't support their beliefs; that conclusion is left to the reader.
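The distorting effect of selective publication can be illustrated with a simulation. This sketch does not reproduce the meta-analysis mentioned above; it uses invented numbers: 2,000 small studies of a treatment with a weak true effect of 0.1, of which only those crossing a rough significance threshold are "published".

```python
import random
import statistics

def simulate_publication_bias(n_studies=2000, n_per_study=30,
                              true_effect=0.1, seed=4):
    """Compare the mean effect over all studies with the 'published' subset.

    Assumptions for illustration: each study estimates a small true effect
    with unit-variance noise, and only studies whose estimate exceeds about
    two standard errors (roughly p < 0.05) are published.
    """
    rng = random.Random(seed)
    std_err = 1 / n_per_study ** 0.5
    estimates = [rng.gauss(true_effect, std_err) for _ in range(n_studies)]
    published = [e for e in estimates if e > 1.96 * std_err]
    return statistics.mean(estimates), statistics.mean(published), len(published)

all_mean, published_mean, n_published = simulate_publication_bias()
print(f"mean effect, all studies:       {all_mean:.2f}")
print(f"mean effect, published studies: {published_mean:.2f}")
print(f"published: {n_published} of 2000")
```

In this model the published literature reports an effect several times larger than the true one, without a single study being falsified. Pooling the unpublished results is what brings the estimate back down.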

To summarize this section, because psychology offers treatments much as doctors do, I suggest that psychology adopt the medical model. Before offering clinical treatments, psychologists should fulfill these conditions:

- Find out what causes a disease

- Establish objective diagnostic criteria that do not depend on individual judgments

- Craft treatments that:

  - Address the cause and

  - Are validated in clinical trials

That seems reasonable. But to date, in designing diagnoses and treatments, psychologists have never followed the medical model. Psychologists might object and cite lithium for bipolar disorder as a counterexample, but that's pharmacology, not psychology (it treats the brain, not the mind), and in any case we don't know why it works.

The absence of a scientific basis for therapeutic practice hasn't stopped psychologists from offering any number of bogus diagnoses and therapies: Recovered Memory Therapy, briefly summarized in the introduction; Facilitated Communication, a now-discredited practice that seemed to reach unreachable people in a procedure that resembles a high-tech séance; and more recently, Asperger Syndrome.

I predict that Asperger Syndrome (hereafter AS), now being abandoned as a diagnosis, will in retrospect be looked on as one of the most brilliant, yet stupid, diagnoses ever invented by psychologists. It's brilliant because, although it carries the stigma of a mental illness (a label that rational people want to avoid), it also offers naive young people the chance to be associated with some famous, very intelligent people — Thomas Jefferson, Albert Einstein, Bill Gates and others — all of whom are thought to have "suffered" from AS.

But it's stupid in equal measure. It has no basis in science, and one of its symptoms is that its "sufferers" are often brilliant and may well become rich and famous, an outcome that gives a whole new meaning to the word "handicapped." It's stupid because those who advocated for its placement in the DSM (The "Diagnostic and Statistical Manual of Mental Disorders"), psychology's diagnostic guidebook, ought to have foreseen what was going to happen if the diagnosis received official sanction. These people fancy themselves experts in the workings of the human mind — how were they unable to foresee the consequences of promoting a mental illness that includes wealth and fame among its diagnostic indicators?

Not surprisingly, after its inclusion in DSM-IV, AS became wildly popular and produced an epidemic of diagnoses, many of which had no basis in reality, even granted the notoriously subjective nature of psychological practice. Finally, desperate to limit the erosion of public confidence in psychology and stem the tide of nonsense diagnoses, the same mental health professionals who advocated for the inclusion of AS in DSM-IV are now advocating for its removal from DSM-V, slated for publication in May 2013.

Figure 4: The real meaning of "Asperger's"

Until the advent of AS, mental illness diagnoses possessed a uniformly negative stigma; they were to be avoided if possible. Therefore for psychologists, the positive associations of AS represent new, uncharted territory. Before it, getting people to acknowledge their mental illness had been an uphill battle against public prejudice and fear. But AS turned everything on its head: psychologists were sometimes put in the uncomfortable position of asking, "Do you really think you're suffering from AS? Maybe you're just intelligent." And anyone with a minimum of acting skill can pass for an AS sufferer.

Now that AS is being abandoned, some of those who reveled in their status as mentally ill "but in a nice way" are angry that their faux disease is being cast out, and some refuse to accept the abandonment as real.

Some of my correspondents in the field of psychology, and members of the unofficial AS fan club, dispute my claim that AS is being abandoned, arguing that officially, AS is being folded into the larger "Autism Spectrum Disorder" (ASD) category. That's true, but:

- Allen Frances, editor of DSM-IV and the psychiatrist who originally advocated for the inclusion of AS in the DSM, describes its true incidence as "vanishingly rare." He goes on to say that the inclusion of AS in DSM-IV resulted in an "epidemic" of diagnoses. His response to this news? "At that point I did an 'oops,' ... This is a complete misunderstanding. It was distressing. Quite distressing." His meaning is clear.

- Other mental health professionals, aware that ASD diagnoses are out of control and no longer have any connection to reality, have joined an American Psychiatric Association task force charged with redefining ASD to stem the tide of nonsense diagnoses. One of those behind the redefinition effort (Dr. Fred Volkmar, director of the Child Study Center at the Yale School of Medicine) says of the diagnosis surge, "We would nip it in the bud."

- These sources suggest that (a) AS was way overdiagnosed and deserved to be cast out, and (b) the move to fold AS into the ASD category, while simultaneously greatly reducing ASD acceptance criteria, shows that the AS diagnosis rate never reflected reality.

Given the absence of scientific evidence for this kind of diagnosis (meaning high-functioning autism), and given the harm that a record of mental illness can do to one's future, why would people want it? Here are some of the reasons:

- As mentioned above, a naive young person, unaware of the long-term price one may pay for having a mental illness diagnosis in one's personal medical records, might be caught up in the fascination of having the same mental illness as a childhood role model (Albert Einstein, Bill Gates), a mental illness that, as a side effect, can make one rich and famous.

- Clinical psychologists saw AS as an easy way to get people into therapy, including people whose only defect is an IQ located to the right of the population mean. Present AS diagnostic criteria reliably identify intelligent people and mark them as mentally ill (see Figure 4).

- As psychiatrist Allen Frances points out, "In order to get specialized services, often one-to-one education, a child must have a diagnosis of Asperger's or some other autistic disorder ... And so kids who previously might have been considered on the boundary, eccentric, socially shy, but bright and doing well in school would mainstream [into] regular classes ... Now if they get the diagnosis of Asperger's disorder, [they] get into a special program where they may get $50,000 a year worth of educational services."

- There is a Social Security program that makes disability payments to parents of mentally ill children. One requirement is that the child must have an officially recognized mental illness diagnosis. One of the accepted diagnoses is "Autistic Disorder and Other Pervasive Developmental Disorders", a category that includes AS. And such disability programs can follow one into adulthood.

Given these incentives, many people did all they could to secure the diagnosis, including cheating — by, for example, coaching children in the expected behaviors, a task made easier by how close AS is to normal behavior.

The remission factor

As mentioned earlier, as AS "sufferers" approach adulthood, most spontaneously show improvement for reasons psychologists don't understand. Here's my proposed explanation: some children are either pressured to accept the AS diagnosis, or find the AS diagnosis to be a romantic way to align themselves with famous, accomplished people, or both. But as adulthood approaches and they acquire more experience, it dawns on them that being labeled mentally ill has nothing but drawbacks, so their fascination with, and symptoms of, AS gradually evaporate.

Rank speculation? Yes, of course — like most of modern psychology. But my reasons for mentioning it are:

- It's an obvious explanation for an observation made by others, and

- Because of what it suggests about AS, I doubt that psychologists will be eager to investigate it.

In a larger sense, I see the AS chapter as part of a long-term program to pathologize normal behavior, one that hopefully will backfire and destroy unscientific psychology.

(Reminder: AS = Asperger Syndrome, ASD = Autism Spectrum Disorder)

A recent CDC report claims that in 2008 the ASD prevalence rate among U.S. children was 1 in 88 (1 in 54 among boys). But this is not a prevalence rate, it's a diagnosis rate — the distinction is critical. Many psychologists now believe that relaxed ASD diagnostic criteria, Asperger Syndrome in particular, have produced an absurdly high diagnosis rate that has no connection with reality.

But, just for the sake of argument, let's say that the CDC report rate really reflects the prevalence of ASD in society, and that the rate continues its present spectacular climb. Among boys, ASD hovers around 2% and is rapidly increasing, and based on that I must ask: at what point will psychologists throw in the towel and acknowledge that they're pathologizing normal behavior? At 10%? 25%?

But this leads to a more important question — when did psychology stop being a service and turn into a proselytizing religion? It seems some psychologists are no longer willing to wait for people to visit their offices to ask for help with specific issues chosen by the clients. Some psychologists have embarked on a program to identify what they deem defective mental functioning, in particular among children, and proactively diagnose and offer treatment for conditions that psychologists deem dysfunctional. And — need I add — without any scientific validation.

But not all psychologists are the sort of opportunist described above. Some earnest, conscientious psychologists feel under pressure to produce diagnoses. For example, in some schools a psychological diagnosis is a precondition for special education funds, as a result of which there may be a student diagnosis rate of 10% or more for a spectrum of conditions (learning disability, ASD, ADD, ADHD, NOS), but with little connection to reality.

Because the number of "abnormal" conditions listed in the DSM increases dramatically with each new revision (now 374), the spectrum of non-pathological ("normal") behaviors has narrowed dramatically. Obviously if present trends continue in future DSM revisions, psychology will eventually cross a line beyond which there will be more abnormal than normal behaviors, and even psychologists should be able to predict the consequences of that among an already skeptical public.

Evolution, the cornerstone of modern biology, is at work in modern-day human society, which means in most cases we should resist the temptation to interfere with nature and brand as abnormal what may be genuinely adaptive behaviors. But it seems psychology's intent with the DSM is to assemble a list of behaviors it deems abnormal, and by implication leave a shrinking list of behaviors deemed normal. This program ultimately tries to take control of natural selection, which we could do only if we could reliably outsmart nature. But we're manifestly unqualified for that role — we don't know more than nature, and with respect to the human brain, we know almost nothing.

The present psychological trend toward proactively listing potentially adaptive behaviors as abnormal, without waiting for clients to approach and ask for help, moves dangerously close to something resembling a secular religion, particularly troubling in an institution that has scientific pretensions.

With specific reference to Asperger Syndrome, intelligent people are already burdened from birth in a society where anti-intellectualism is a fact of life. It would be nice to avoid pointless diversions during an intelligent person's formative years, beyond the bullying and ridicule that seem an unavoidable part of growing up bright. But to be falsely labeled mentally ill, using diagnostic criteria with no scientific support, and with respect to a condition having no scientific validation or even an identified cause, seems particularly deplorable.

To put this in the simplest terms, bright children are bullied by dull classmates offended by how different they are, then they're bullied by dull psychologists offended by how different they are. Both groups miss the fact that difference is the engine of natural selection, nature favors intelligence, and a typical modern psychological diagnosis may misread and condemn what in truth is a selective advantage.

To summarize, if I could change how mental health services are managed in modern society, I would tell psychologists: stop using pseudoscience to tell us how sick we are, go into your office and close the door. If we decide we want your services, we'll call you.

Reader responses to this article

- Empirical (Wikipedia) — The meaning of "empirical" as it relates to science

- Falsifiability (Wikipedia) — The concept of "falsifiability" as it relates to science

- Recovered-memory therapy (Wikipedia) — The RMT debacle of the 1990s

- Creating False Memories — Elizabeth Loftus studies false memory

- Public Skepticism of Psychology (PDF) — An insider explains how psychology is seen by the public

- David Hume (Wikipedia)

- Forensic-Evidence: Recovered Memory-P.Nichols — Legal Tools for Preventing the Admission of "Recovered Memory" Evidence at Trial

- Demarcation problem (Wikipedia) — How we distinguish between science and non-science

- Russell's teapot (Wikipedia) — About the impossibility of proving a negative

- Null hypothesis (Wikipedia) — The assumption that an idea without evidence is false

- Arkansas Act 590 — An Arkansas law that mandated teaching of Creationism in public schools

- McLean v. Arkansas (Wikipedia) — A landmark legal decision that used a concise definition of science to outlaw teaching of Creationism in public schools

- The Wizard of Oz (1939 film) (Wikipedia) — My source for the "Kansas test"

- Kitzmiller v. Dover Area School District (Wikipedia) — A more recent pro-science legal ruling

- Michael Behe (Wikipedia) — An ID proponent in the Kitzmiller v. Dover case

- Peppered moth evolution (Wikipedia) — A classic example of evolution on a short time scale

- Correlation does not imply causation (Wikipedia) — A common experimental pitfall

- Reproducibility (Wikipedia) — The scientific process formally called "replication"

- Schön scandal (Wikipedia) — An infamous example of cheating in science

- Bell Labs (Wikipedia) — A science laboratory with a long tradition of excellent work

- Hwang Woo-suk (Wikipedia) — A disgraced scientist is charged

- Leaders of Faster-Than-Light Experiment Step Down - ScienceInsider — The fallout from a failed neutrino claim

- Occam's razor (Wikipedia) — Also known as lex parsimoniae, the law of parsimony

- Higgs boson (Wikipedia) — An intriguing particle thought to confer mass to matter

- Standard Model (Wikipedia) — The reality blueprint crafted by physics

- Standard deviation (Wikipedia) — A statistical term

- P-value (Wikipedia) — The probability that an experimental outcome results from chance

- One- and two-tailed tests (Wikipedia) — Conventions in statistical analysis

- Psychology (Wikipedia) — The study of the mind

- Neuroscience (Wikipedia) — The study of the brain and nervous system

- Faulty Circuits: Scientific American — NIMH director Insel describes a trend away from psychology toward neuroscience

- Prospective cohort study (Wikipedia) — Another way to improve the quality of experimental evidence

- Blind experiment (Wikipedia) — Meant to minimize errors in human studies

- Retrospective cohort study (Wikipedia) — A less reliable study design

- Six Developmental Trajectories Characterize Children With Autism — A study that tries to connect intensive therapy with positive outcomes, but with no evidence

- 10 percent of autistic kids "bloom" with therapy: study — A media account of the above study

- Autism Improves in Adulthood — Indication of an improvement over time without treatment

- Statistical Evidence in Experimental Psychology — An indictment of psychological results analysis

- Noted Dutch Psychologist, Stapel, Accused of Research Fraud — A shocking exposé of widespread research fraud

- INTERIM REPORT REGARDING THE BREACH OF SCIENTIFIC INTEGRITY COMMITTED BY PROF. D.A. STAPEL — A coordinated investigation of Stapel by three Dutch universities

- Final Report: Stapel Affair Points to Bigger Problems in Social Psychology — Three investigative panels move beyond the Stapel affair and find fault with the field of psychology itself

- Data mining (Wikipedia) — The sometimes deplorable practice of hunting for the appearance of significance

- PLoS ONE: Willingness to Share Research Data Is Related to the Strength of the Evidence and the Quality of Reporting of Statistical Results — A strong correlation between reluctance to share research data and doubtful conclusions

- Cognitive behavioral therapy (Wikipedia) — A widely practiced therapeutic method

- Establishing Specificity in Psychotherapy Scientifically: Design and Evidence Issues (abstract) — Finds little evidence favoring any specific therapeutic method over others

- Treating depression with the evidence-based psychotherapies: a critique of the evidence — A study that casts doubt on CBT's efficacy

- A meta-(re)analysis of the effects of cognitive therapy versus 'other therapies' for depression — Concludes that all legitimate therapeutic methods are equally efficacious

- The effectiveness of psychotherapy — In essence, no significant difference between therapies

- Placebo (Wikipedia) — A discussion of the beneficial effect of ineffective substances

- Hawthorne effect (Wikipedia) — Subjects change their behavior because they know they are being observed, no matter what treatment is offered

- PLoS Medicine: Initial Severity and Antidepressant Benefits: A Meta-Analysis of Data Submitted to the Food and Drug Administration — A study questioning the effectiveness of antidepressant medications

- Facilitated communication (Wikipedia) — A discredited practice that pretends to reach unreachable people

- Asperger syndrome (Wikipedia) — A diagnosis that is at once brilliant and stupid

- Diagnostic and Statistical Manual of Mental Disorders (Wikipedia) — Psychology's diagnostic guidebook

- Diagnostic and Statistical Manual of Mental Disorders (DSM IV) — The current DSM version

- What's A Mental Disorder? Even Experts Can't Agree : NPR — An evaluation of the Asperger's debacle

- Autism spectrum (Wikipedia) — A large category of autism-related conditions, not all debilitating

- New Definition of Autism May Exclude Many, Study Suggests - NYTimes.com — Implying that past diagnosis rates had little connection to reality

- Social Security - 112.00-Mental Disorders-Childhood — In short, you can get paid for being an Aspie child

- Social Security - 12.00-Mental Disorders-Adult — Or an Aspie adult

- American Psychological Association (APA) — A professional association of psychologists and others

- Prevalence of Autism Spectrum Disorders — Autism and Developmental Disabilities Monitoring Network, 14 Sites, United States, 2008 — A report suggesting an ASD prevalence among children of 1 in 88 (2008)

- An Insider's Perspective — A school psychologist under pressure to produce diagnoses
